Published in Electronic Design

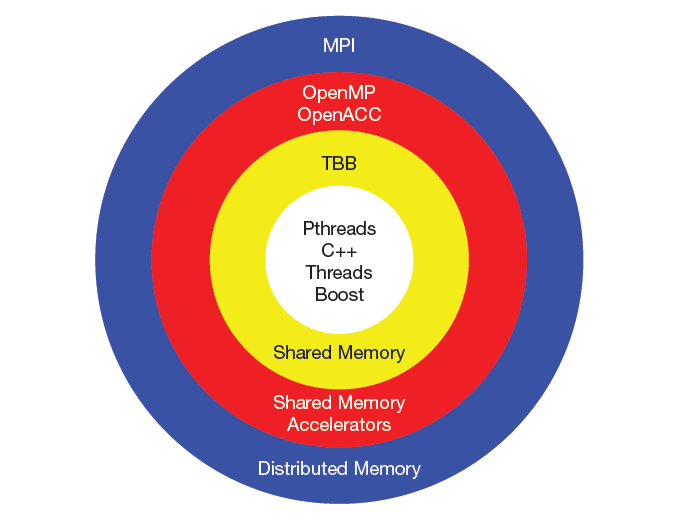

High-performance computing means the use of multiple cores and parallel processing, but there are many ways to address the software implementation.

Most of the legacy code that needs porting to newer systems is serial code, meaning that the code runs on a single processor with only one instruction executing at a time. Modern OpenVPX boards incorporate powerful, multicore processors such as the Intel Xeon-D. Running serial code on these high-performance processors leaves most of their cores idle, which increases the number of boards required in your system and negatively impacts your size, weight, power, and cost (SWaP-C). General-purpose GPUs (GPGPUs) are also becoming more common in OpenVPX systems due to their massively parallel architecture, which consists of thousands of cores designed to process multiple tasks simultaneously.

To modernize your serial code for parallel execution, you must first identify the individual sections that can be executed concurrently, and then optimize those sections for simultaneous execution on different cores and/or processors. Parallel programs must also employ some type of control mechanism for coordination, synchronization, and data realignment. To aid in parallelization, numerous open standard tools are available in the form of language extensions, compiler extensions, and libraries.

So, which method or path is right for you? Let’s explore a few options.

Most of the options have evolved from pthreads, a C library defined by the IEEE in the POSIX 1003.1c standard, which dates to circa 1995. POSIX is short for Portable Operating System Interface for UniX. Being a mature technology, pthreads is available on most CPU-based platforms. Remember, a thread is a procedure that runs independently from its main program. A program that schedules such procedures to run simultaneously and/or independently is a “multi-threaded” program.
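As a minimal sketch of a procedure running independently from its main program, the C fragment below starts one thread and waits for it to finish. The names `square_worker` and `square_on_thread` are hypothetical and chosen only for illustration; `pthread_create` and `pthread_join` are the standard pthreads calls.

```c
#include <pthread.h>

/* Hypothetical worker: squares the integer it is handed, in place. */
static void *square_worker(void *arg)
{
    int *value = arg;
    *value = (*value) * (*value);
    return NULL;
}

/* Runs square_worker on its own thread and waits for it to finish.
 * Returns 0 on success, or the pthreads error code on failure. */
int square_on_thread(int *value)
{
    pthread_t tid;
    int rc = pthread_create(&tid, NULL, square_worker, value);
    if (rc != 0)
        return rc;
    return pthread_join(tid, NULL);
}
```

Calling `square_on_thread(&v)` with `v` set to 7 leaves 49 in `v` once the join returns. On most toolchains the file must be built with the compiler's thread option, for example `-pthread` with GCC or Clang.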

Parallel-programming options

Why Would You Want to Use Threads?

Creating threads requires less system overhead than creating processes, and managing threads requires fewer system resources than managing processes. Threads within a single process share the same memory space, so they can exchange data without the inter-process transfers that separate processes require.
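The shared memory space can be illustrated with a short sketch in which a serial summation loop is split across several threads. Every thread reads the caller's array directly and writes its partial result into its own slot, so no data is copied between workers. The thread count and the names `slice`, `sum_slice`, and `parallel_sum` are assumptions made for this example, not part of any standard.

```c
#include <pthread.h>
#include <stddef.h>

#define NUM_THREADS 4  /* assumed thread count for this sketch */

/* Each thread receives pointers into the caller's memory; nothing is copied. */
struct slice {
    const int *data;    /* shared input array */
    size_t begin, end;  /* half-open range this thread sums */
    long partial;       /* result slot written only by this thread */
};

static void *sum_slice(void *arg)
{
    struct slice *s = arg;
    long acc = 0;
    for (size_t i = s->begin; i < s->end; ++i)
        acc += s->data[i];
    s->partial = acc;
    return NULL;
}

/* Sums data[0..n) using up to NUM_THREADS threads.
 * Returns 0 on success, or the pthread_create error code. */
int parallel_sum(const int *data, size_t n, long *total)
{
    pthread_t tid[NUM_THREADS];
    struct slice slices[NUM_THREADS];
    size_t chunk = n / NUM_THREADS;
    int started = 0, rc = 0;

    for (int t = 0; t < NUM_THREADS; ++t) {
        slices[t].data = data;
        slices[t].begin = (size_t)t * chunk;
        /* The last thread also takes any leftover elements. */
        slices[t].end = (t == NUM_THREADS - 1) ? n : (size_t)(t + 1) * chunk;
        slices[t].partial = 0;
        rc = pthread_create(&tid[t], NULL, sum_slice, &slices[t]);
        if (rc != 0)
            break;
        ++started;
    }

    /* Join whatever was started; partials are safe to read after the join. */
    *total = 0;
    for (int t = 0; t < started; ++t) {
        pthread_join(tid[t], NULL);
        *total += slices[t].partial;
    }
    return rc;
}
```

Because each thread writes only to its own `partial` field, this particular split needs no locking; the join is the only synchronization point.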

Consisting of approximately 100 functions, the pthreads API is divided into four distinct groups: thread management, mutexes, condition variables, and synchronization. As the name implies, thread-management functions handle the creating, detaching, and joining of the threads. An abbreviation for “mutual exclusion,” mutexes function as locks on a common resource, such as a variable or hardware device, to ensure that only one thread has access at a given time. Condition variables let a thread wait until a particular condition on shared data becomes true. The synchronization functions manage the read/write locks and the barriers. Think of a barrier as a stop sign; the threads must wait at this point until all of the threads in their group arrive, and then they can proceed to the next operation.
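The mutex and the barrier can be sketched together in one small C example. In the first phase each thread increments a shared counter under a mutex; the barrier then acts as the stop sign, so every thread that records the counter in the second phase sees the final total. The group size, the work per thread, and the names `worker` and `run_phases` are assumptions for illustration. Barriers are an optional part of POSIX, so this sketch also assumes a platform that provides `pthread_barrier_t`, such as Linux.

```c
#define _POSIX_C_SOURCE 200809L  /* exposes pthread barriers under strict -std modes */
#include <pthread.h>
#include <stdio.h>
#include <stdlib.h>

#define NUM_THREADS 4       /* assumed group size for this sketch */
#define INCREMENTS  100000  /* assumed amount of work per thread */

static pthread_mutex_t lock = PTHREAD_MUTEX_INITIALIZER;
static pthread_barrier_t phase_barrier;
static long counter;  /* shared resource, guarded by lock */

static void *worker(void *arg)
{
    long *seen = arg;

    /* Phase 1: the mutex lets only one thread update counter at a time. */
    for (int i = 0; i < INCREMENTS; ++i) {
        pthread_mutex_lock(&lock);
        ++counter;
        pthread_mutex_unlock(&lock);
    }

    /* The stop sign: nobody proceeds until all NUM_THREADS threads arrive. */
    pthread_barrier_wait(&phase_barrier);

    /* Phase 2: every thread has finished phase 1, so counter is final. */
    pthread_mutex_lock(&lock);
    *seen = counter;
    pthread_mutex_unlock(&lock);
    return NULL;
}

/* Runs both phases and stores what each thread saw after the barrier.
 * Returns 0 on success, -1 if the barrier could not be created. */
int run_phases(long seen[NUM_THREADS])
{
    pthread_t tid[NUM_THREADS];

    counter = 0;
    if (pthread_barrier_init(&phase_barrier, NULL, NUM_THREADS) != 0)
        return -1;

    for (int t = 0; t < NUM_THREADS; ++t) {
        if (pthread_create(&tid[t], NULL, worker, &seen[t]) != 0) {
            /* A missing thread would leave the others stuck at the barrier. */
            fprintf(stderr, "pthread_create failed\n");
            exit(EXIT_FAILURE);
        }
    }
    for (int t = 0; t < NUM_THREADS; ++t)
        pthread_join(tid[t], NULL);

    pthread_barrier_destroy(&phase_barrier);
    return 0;
}
```

Without the mutex, the increments from different threads could interleave and lose updates; without the barrier, a fast thread could record the counter before the slower threads had finished adding to it.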