Rugged GPGPU processing cards for machine learning and artificial intelligence

For systems that require enhanced situational awareness or that run deep learning frameworks for artificial intelligence (AI) applications, these highly engineered modules provide a field-proven hardware foundation. Answering the growing demand for AI and high-performance processing in deployed electronic warfare (EW) and intelligence, surveillance, and reconnaissance (ISR) applications, our 3U VPX GPU modules deliver advanced capabilities on a highly rugged, SWaP-optimized board. They leverage the latest GPU advancements from NVIDIA for machine learning and AI. Equipped with NVIDIA CUDA cores and Tensor cores for machine learning, our 3U VPX GPU boards offer TFLOPS-class processing and memory bandwidth of up to 576 GB/s for the most compute-intensive tasks.

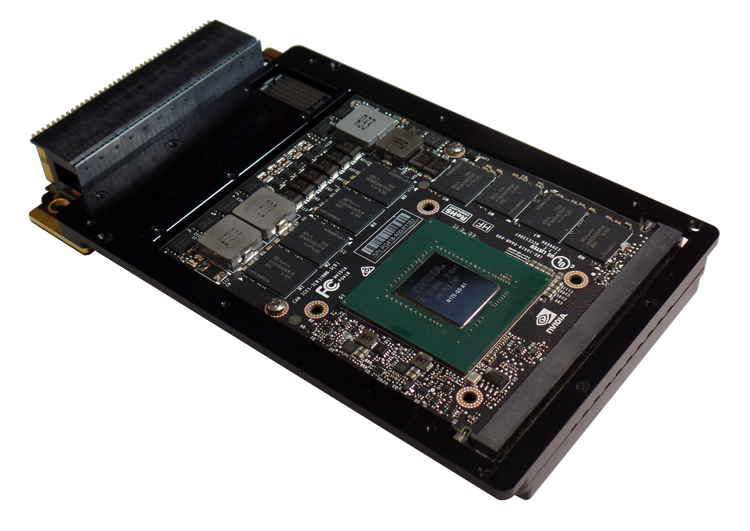

3U VPX GPGPU processor cards

| Product Name | Generation | Memory | Memory Bandwidth | Features | PCIe Configuration |
|---|---|---|---|---|---|
| VPX3-4935 | NVIDIA Quadro Turing RTX5000E (3072 CUDA cores, 384 Tensor cores) | 16 GB GDDR6 | 448 GB/s | 4 video ports out (DP, DVI, or HDMI) | x16 Gen 3 |
| VPX3-4937 | NVIDIA RTX™ 5000 (AD103) GPU with 9728 CUDA cores, 304 Tensor cores, and 76 RT cores | 16 GB GDDR6 | 576 GB/s | Up to 3 DisplayPort outputs and 1 DVI output | x16 Gen 4 |
| VPX3-493 | NVIDIA Turing | GDDR6 | | | x16 Gen 3 |
| VPX3-4924 | NVIDIA Tesla Pascal (2048 CUDA cores) | 16 GB GDDR5 | 192 GB/s | | x16 Gen 3 |

Reduce cost, risk, and time to market with COTS hardware

Our broad selection of open-architecture, commercial off-the-shelf (COTS) rugged embedded computing solutions processes data in real time to support mission-critical functions. Field-proven, highly engineered, and manufactured to stringent quality standards, Curtiss-Wright's COTS boards draw on our extensive experience and expertise to reduce your program cost, development time, and overall risk.

The Role of Tensor Cores in Enabling AI and Machine Learning

Tensor cores are indispensable for the types of calculations that artificial intelligence (AI) and machine learning require. As the role of AI and machine learning in defense applications grows, tensor cores are becoming critical for defense systems. In this white paper, you will discover how tensor cores are used in AI and machine learning, and how to incorporate them into extremely rugged applications.

How Can I Teach My Machine to Learn?

Read this white paper to learn about:

- Supervised, unsupervised, and semi-supervised approaches to machine learning

- Classification algorithms

- Regression analysis

- Clustering

- Dimensionality reduction

- Machine learning frameworks, including TensorFlow, Keras, PyTorch, MXNet and Gluon, and Caffe