Latency, the measure of how long it takes data to get from one device to another across a network, can be critical to the performance of connected embedded systems. For applications that rely on high-speed delivery of real-time data, latency can be a serious concern that puts safety and mission success at risk.

Wide area networks that include multiple hops over long-distance links can see latencies in the hundreds of milliseconds, which produces awkward delays and interruptions in two-way voice conversations. Latency on a switched Ethernet LAN is typically much lower and is measured in microseconds. Still, microseconds can matter in industrial applications that control motors based on inputs from networked sensors, or in radar processing systems that track multiple fast-moving targets.

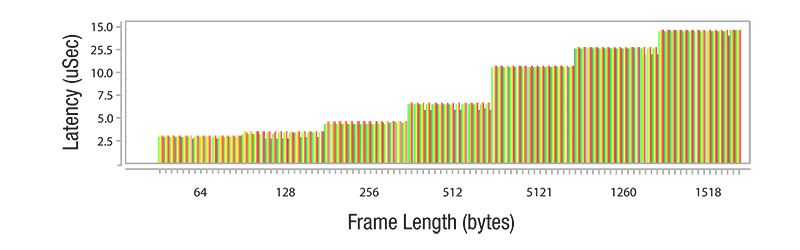

Gigabit Ethernet Switch Latency for Various Frame Lengths, RFC 2544 Report
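
One reason latency depends on frame length is serialization delay, the time needed to clock a frame's bits onto the wire. The following is a minimal sketch, not taken from the report above: it assumes a 1 Gbit/s link, uses the Ethernet frame sizes that RFC 2544 recommends for benchmarking, and ignores the preamble and inter-frame gap. The names `RFC2544_FRAME_SIZES`, `LINK_RATE_BPS`, and `serialization_delay_us` are illustrative.

```python
# Frame sizes (in bytes) that RFC 2544 recommends for Ethernet benchmarking.
RFC2544_FRAME_SIZES = [64, 128, 256, 512, 1024, 1280, 1518]

# Assumed link rate: Gigabit Ethernet, 1e9 bits per second.
LINK_RATE_BPS = 1_000_000_000


def serialization_delay_us(frame_bytes, link_rate_bps=LINK_RATE_BPS):
    """Return the time to transmit one frame, in microseconds.

    Counts only the frame itself; the preamble and inter-frame gap
    are deliberately left out of this simplified estimate.
    """
    return frame_bytes * 8 / link_rate_bps * 1e6


if __name__ == "__main__":
    for size in RFC2544_FRAME_SIZES:
        print(f"{size:>5} bytes: {serialization_delay_us(size):7.3f} us")
```

Under these assumptions a 64-byte frame takes about 0.5 microseconds to transmit and a 1518-byte frame about 12 microseconds. A store-and-forward switch has to receive the whole frame before forwarding it, so this figure is roughly a lower bound on its per-hop latency; a cut-through switch can begin forwarding sooner.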