Ethernet has become the primary means of connecting modern embedded systems. It delivers high performance at low cost and is supported in many off-the-shelf products. Because Ethernet can connect a wide variety of systems, many integrators are choosing to design their platforms around a converged Ethernet network that carries traffic from multiple applications. Replacing multiple single-purpose buses with one centralized switch and twisted-pair cabling offers the opportunity to reduce weight, power, and complexity.

Moving to a converged network can have numerous benefits but also presents a challenge. Systems connected over serial buses or dedicated networks were easy to design and analyze, with predictable performance and latency. In contrast, a converged network carrying multiple types of traffic has the potential for interference, where traffic from one application will delay traffic from another. For applications that are not time-sensitive, such as messaging and file transfers, delays may not be an issue. But for applications involving voice, video, or real-time sensor data, delays or dropped traffic may have unacceptable effects.

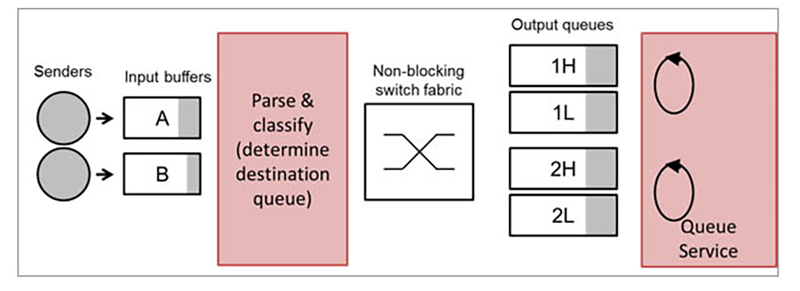

To address the challenges of converged networks - supporting multiple applications with varying service requirements - modern networking equipment implements a variety of “Quality of Service” (QoS) features. These features allow traffic from critical applications to be prioritized so that it is delivered without delays or drops. To take advantage of these features, integrators must properly configure their networks to provide the right treatment for traffic from different applications. This paper provides an introduction to the principles of QoS in Ethernet and IP networks, with an emphasis on practical implications for embedded systems.

Figure 1: QoS in an Ethernet Switch
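The interference and prioritization described above can be illustrated with a minimal sketch. It assumes a single egress port where every frame is already queued at time zero, and it compares first-in-first-out service with strict-priority service. The `Frame` type, the application labels, the priority values, and the 1 Gb/s link rate are all hypothetical choices made for illustration; the model ignores preamble, inter-frame gap, and frame preemption, and it is not a description of any particular switch.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Frame:
    app: str         # hypothetical application label
    priority: int    # higher value = more critical (illustrative scale)
    size_bytes: int  # frame size on the wire, overheads ignored


def egress_delays(frames, link_bps, strict_priority):
    """Return {app: worst completion time in seconds}.

    Assumes all frames are queued at t=0 on one egress port.
    """
    order = list(frames)
    if strict_priority:
        # sorted() is stable, so arrival order is kept within a priority.
        order = sorted(order, key=lambda f: -f.priority)
    t = 0.0
    worst = {}
    for f in order:
        t += f.size_bytes * 8 / link_bps  # serialization time of this frame
        worst[f.app] = max(worst.get(f.app, 0.0), t)
    return worst


# A burst of bulk traffic arrives just ahead of one small sensor frame.
burst = [Frame("file-transfer", 0, 1500)] * 10 + [Frame("sensor", 6, 100)]

fifo = egress_delays(burst, 1e9, strict_priority=False)
prio = egress_delays(burst, 1e9, strict_priority=True)

for name, result in (("FIFO", fifo), ("strict priority", prio)):
    print(f"{name}: sensor frame done after {result['sensor'] * 1e6:.1f} us")
```

Under these assumptions the sensor frame waits behind the whole burst in the FIFO case (about 120.8 µs) but leaves first with strict priority (about 0.8 µs), while the bulk transfer finishes at the same time in both cases.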

Download the white paper to learn more.

- Ethernet switching

- IP Quality of Service

- Real-time networking