The Next Generation Through Partners

Whether you’re building a 3U or 6U VPX system, Peripheral Component Interconnect Express (PCIe) plays an important role in either expansion plane or data plane connectivity. With Curtiss-Wright Defense Solutions’ OpenHPEC Accelerator Suite, the details of non-transparent bridges (NTB) can be completely hidden from the user while providing a standard API at the application layer. Built on partner technology such as Intel’s Xeon-D System on Chip (SoC) processors, our latest products offer significant advantages in the rugged, harsh environments of the embedded space. Curtiss-Wright has partnered with several technology-leading companies to pave the way for the future of switching capabilities, instrumentation of data storage, data processing, and data ingest in both 6U and 3U form factors, ensuring access to the latest fabrics and middleware. By building rapport and maintaining these partnerships, Curtiss-Wright offers customers the rugged products, support, and software development tools required to create the next generation of High Performance Embedded Computing (HPEC) systems.

Fabrics and Middleware

The introduction of OpenVPX in 2009 made open-standard ecosystems a reality and made it easier to build next-generation rugged computer systems. Aligning with the Department of Defense’s (DoD) open standards requirements, Curtiss-Wright’s Fabric40 products are at the forefront of new technology for both 3U and 6U OpenVPX systems. Because our Fabric40 products use standard High Performance Computing (HPC) technologies such as processors and interconnects, new technology can be standardized easily and efficiently for future programs.

OpenVPX Control, Expansion and Data Planes

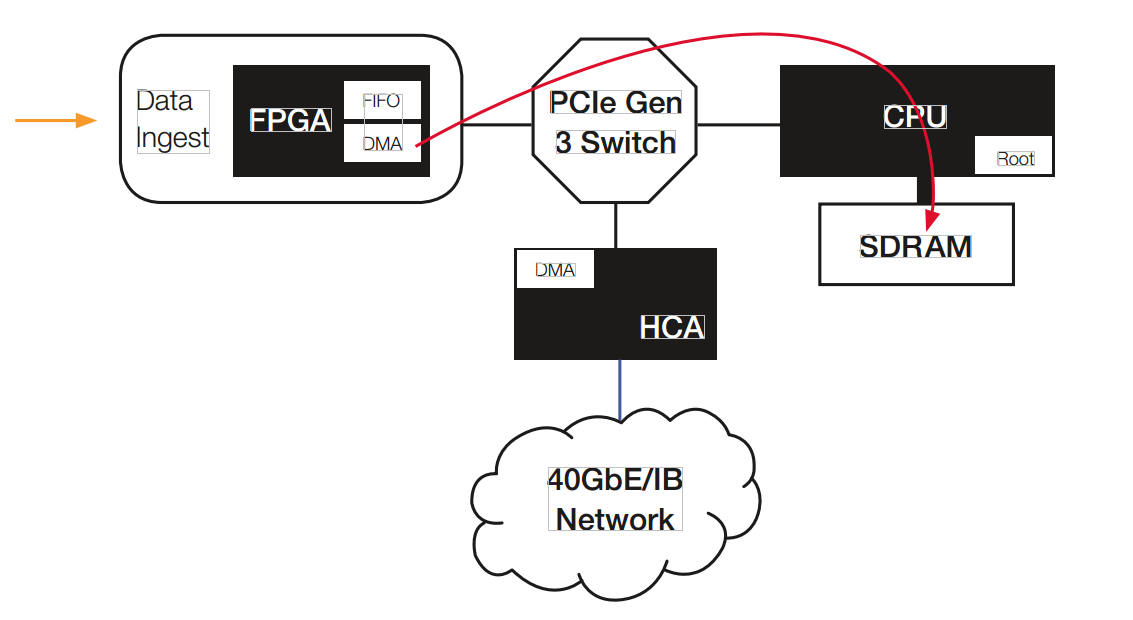

PCIe plays an important role in either Expansion Plane or Data Plane connectivity. The typical use case for PCIe is to connect one root complex device (e.g. a processor) to one or more endpoints (e.g. a GPU or FPGA). With the addition of non-transparent ports (NTP), NTB, and even Direct Memory Access (DMA) engines, PCIe can be used as a data plane fabric, allowing multiple root complex devices to be connected on the same PCIe fabric. When you are building a PCIe-based cluster, connecting multiple processors and configuring the NTBs can be a software nightmare. With Curtiss-Wright’s OpenHPEC Accelerator Suite, the details of NTB can be completely hidden from the user while providing a standard API at the application layer.
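To give a feel for the bookkeeping that an NTB involves, the sketch below models a single translation window in Python: an address written inside a window in the local host's address space is redirected to the matching offset in the peer host's memory. This is a toy model only, not the OpenHPEC Accelerator Suite API; the `NtbWindow` class, its field names, and the addresses are all hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class NtbWindow:
    """Hypothetical model of one NTB address-translation window."""

    local_base: int   # start of the window in the local host's address space
    size: int         # window length in bytes
    remote_base: int  # where the window lands in the peer host's memory

    def translate(self, local_addr: int) -> int:
        """Map a local address inside the window to the peer's address."""
        offset = local_addr - self.local_base
        if not 0 <= offset < self.size:
            raise ValueError(f"address {local_addr:#x} is outside the window")
        return self.remote_base + offset


# Illustrative values only: a 1 MiB window onto a peer buffer.
window = NtbWindow(local_base=0x9000_0000, size=0x10_0000,
                   remote_base=0x2_4000_0000)
peer_addr = window.translate(0x9000_0040)
print(hex(peer_addr))
```

In a real cluster every pair of communicating processors needs windows like this set up in both directions, which is the kind of detail a middleware layer can hide.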

Moving Data

Multicasting provides an interesting capability for data ingest in low-latency systems. Instead of decomposing the data in the data ingest FPGA and creating multiple transfers, multicast can send all the data to all the nodes in the cluster using just one transfer. This is extremely useful in PCIe-based systems where the data must be accessible by multiple nodes.
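The difference between the two ingest strategies can be sketched with a small Python model. It only counts the transfers the data source has to issue and shows what each node ends up holding; the function names are hypothetical, and the even split used in the unicast case is an assumption made for illustration.

```python
def ingest_unicast(data: bytes, node_count: int) -> tuple[list[bytes], int]:
    """Decompose the data into one slice per node, one transfer per slice."""
    chunk = -(-len(data) // node_count)  # ceiling division
    slices = [data[i * chunk:(i + 1) * chunk] for i in range(node_count)]
    return slices, node_count  # the source issues node_count transfers


def ingest_multicast(data: bytes, node_count: int) -> tuple[list[bytes], int]:
    """Issue one transfer; every node receives the full data set."""
    return [data] * node_count, 1  # the source issues a single transfer


frame = bytes(range(16))
unicast_views, unicast_transfers = ingest_unicast(frame, node_count=4)
multicast_views, multicast_transfers = ingest_multicast(frame, node_count=4)

print(unicast_transfers, [len(v) for v in unicast_views])
print(multicast_transfers, [len(v) for v in multicast_views])
```

With unicast, each node sees only its own slice and the source does the decomposition work; with multicast, the source sends once and every node can read the whole frame.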

Download our Data Transport for OpenVPX HPEC White Paper to learn more about:

- OpenVPX

- CPUs, GPUs, FPGAs

- Fabric40

- Control Plane

- Data Plane

- Expansion Plane

- Mellanox ConnectX-3

- PCI Express Switching

- Remote Direct Memory Access